Summary

I am interested in too many things, and there are many great content creators constantly releasing videos. At one point, I subscribed to nearly 200 channels on YouTube. I have learned a lot and had fun watching their videos. However, I have started to realize that I spend quite a lot of time watching them, probably too much, to be honest.

Why summarize YouTube videos

I spend quite a lot of time on YouTube, probably over an hour per day on average, because some great content creators continually release high-quality videos on topics that interest me. The catch is that I often don’t stop after watching the new content from the channels I subscribe to. I keep watching, because the recommendation algorithm is tailored to keep you on the website as long as possible by suggesting similar videos. This is good for discovering new videos and channels, and I did find most of my now-favorite channels this way. That said, I have already collected a set of channels on the topics I care about, such as general science, astronomy, photography, news commentary, mathematics, outdoor activities, and finance, so the value of exploring new channels has decreased a lot. More often than not, I am disappointed by the recommended content from newly discovered channels. I would like to watch content from my subscribed channels most of the time and only explore other channels occasionally.

In addition, not every video from my favorite channels is interesting. I may not be interested in all the topics a channel covers, or the quality of its videos may vary. It would be too much to ask for consistently high-quality content from online creators, many of whom work on this part-time. What I want is a quick preview of the content so I can decide whether to watch it.

So, what I need is an application that checks for updates from a list of YouTube channels and gives me a summary of each new video before I open the website and potentially spend (or, in some cases, waste) a significant amount of time there. To achieve this, we need to implement two functions: 1. a function that summarizes the content of a YouTube video, and 2. a function that checks for recent updates from a list of YouTube channels and returns the links to the newly uploaded videos.

And given the recent release of the Llama 3.1 8B model, I also wanted to build the application on top of an LLM running on my own computer, instead of relying on closed models like OpenAI’s GPT models.

Summarizing the content of a YouTube video

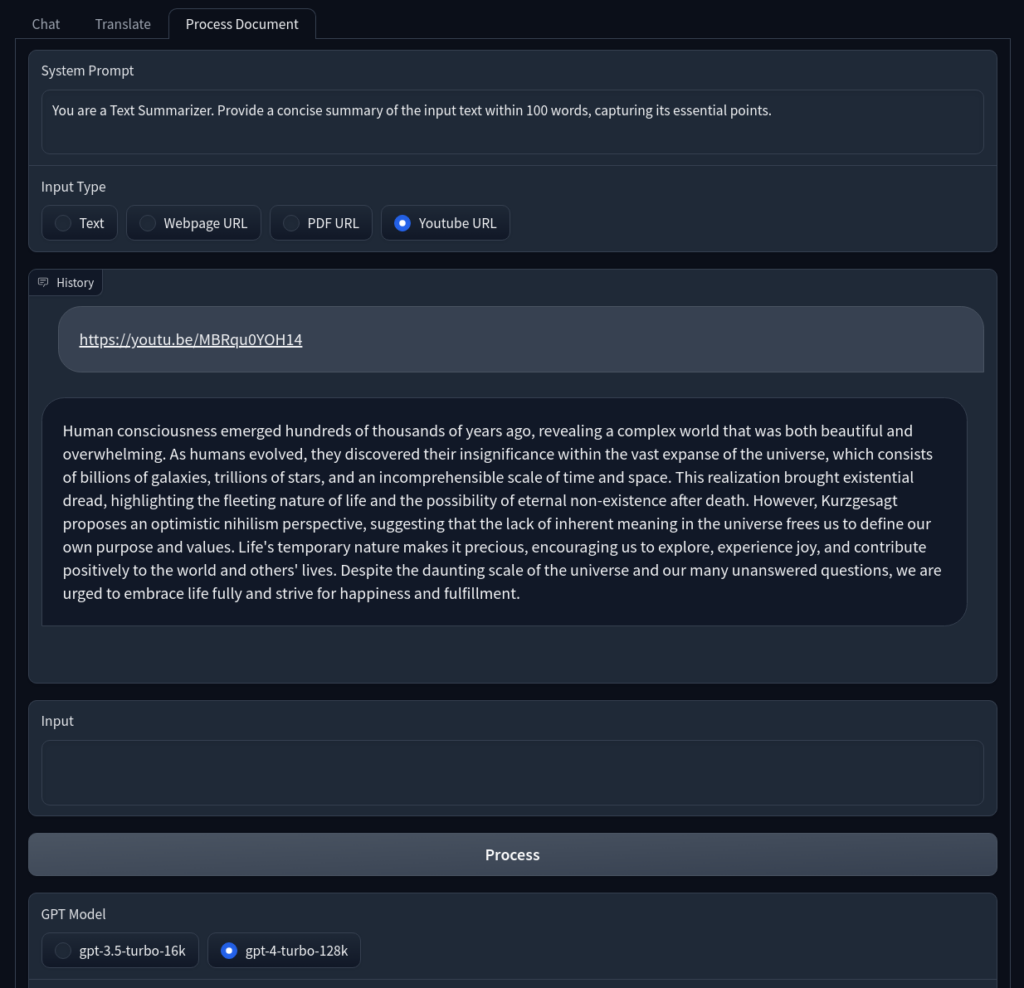

I have already built a web application that includes YouTube video summarization (https://github.com/shulik7/HomeGPT), and I use it quite often.

For this application, we don’t need the web UI, and I would like to use Ollama to run LLMs locally. Switching from the OpenAI API to a local model is quite simple with Ollama and LangChain’s Ollama integration.

```python
from langchain_community.llms import Ollama


class ModelHelper:
    @staticmethod
    def get_model(model="llama3.1"):
        # Wrap a model served by the local Ollama instance ("llama3.1" is the 8B variant by default)
        return Ollama(model=model)
```

The code below summarizes a YouTube video by passing its transcript to the local LLM. It looks for an English or Chinese transcript, since I only watch videos in these two languages. It also translates the transcript into English when necessary, because in my tests Llama 3.1 produced much better output in English than in Chinese. This step may be unnecessary with a model that performs well in both languages.

```python
import sys

from langchain.chains import LLMChain, StuffDocumentsChain
from langchain_community.document_loaders import YoutubeLoader
from langchain_core.prompts import PromptTemplate

from model_helper import ModelHelper


class TranscriptProcessor:
    def __init__(self, model):
        self.model = model

    def process(self, url):
        # Prompt: a short instruction followed by the transcript text
        system_prompt = "please summarize "
        prompt_template = system_prompt + "{text}"
        prompt = PromptTemplate.from_template(prompt_template)
        llm_chain = LLMChain(llm=self.model, prompt=prompt)
        # "Stuff" all transcript documents into the single {text} slot of the prompt
        chain = StuffDocumentsChain(llm_chain=llm_chain, input_key="text")

        # Fetch an English or Chinese transcript and translate it into English
        loader = YoutubeLoader.from_youtube_url(
            url,
            add_video_info=True,
            language=["en", "en-US", "zh", "zh-Hans", "zh-Hant", "zh-TW"],
            translation="en",
        )
        youtube_transcript = loader.load()
        return chain.invoke(youtube_transcript)


if __name__ == "__main__":
    url = sys.argv[1]
    processor = TranscriptProcessor(ModelHelper.get_model("llama3.1"))
    response = processor.process(url)
    print(response["output_text"])
```

Retrieving recent videos from YouTube channels

We will supply this app with a list of YouTube channels; it will check each channel for new videos uploaded within a given timeframe and summarize them, if any exist.

First, we create the YoutubeChannel class, which finds information about a channel’s recent videos by parsing the HTML of the channel’s Videos page.

```python
import re
import sys

import requests


class YoutubeChannel:
    base_url = "https://www.youtube.com/watch?v="
    videos_html_suffix = "/videos"

    def __init__(self, url):
        self.url = url
        self.videos_html = self.get_videos_html()
        self.name = self.get_name()
        self.videos_info = self.get_videos_info()

    def get_name(self):
        if self.videos_html == "":
            self.videos_html = self.get_videos_html()
        # The page title has the form "<channel name> - YouTube"
        return re.search(r"<title>(.+) - YouTube</title>", self.videos_html).group(1)

    def get_videos_html(self):
        # Download the HTML of the channel's "Videos" tab
        return requests.get(self.url + self.videos_html_suffix, timeout=30).text

    def get_videos_info(self):
        if self.videos_html == "":
            self.videos_html = self.get_videos_html()
        # Each label reads like "<title> by <channel> <N> views <age> ago ...";
        # split off the title at the last " by "
        title_and_info = [
            x.rsplit(" by ", 1)
            for x in re.findall(
                r'(?<={"label":")[^}]*?(?="}\}\},"descriptionSnippet")', self.videos_html
            )
        ]
        urls = re.findall(r'{"videoRenderer":{"videoId":"(.+?)",', self.videos_html)
        # Return (title, age, url) tuples, where age is e.g. "2 days"
        return [
            (x[0], re.search(r"views (.+?) ago", x[1]).group(1), self.base_url + y)
            for x, y in zip(title_and_info, urls)
        ]


if __name__ == "__main__":
    channel_url = sys.argv[1]
    youtube_channel = YoutubeChannel(channel_url)
    print(f"Channel name: {youtube_channel.name}")
    for i in youtube_channel.videos_info:
        print(i)
```
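The list comprehension in get_videos_info assumes that every label contains a “views … ago” fragment. If one does not (an upcoming premiere, for example), re.search returns None and .group(1) raises an exception. A guarded version of that parsing step could look like the sketch below. parse_label is a hypothetical helper, and the label format is inferred from the regexes in the class rather than from any YouTube documentation.

```python
import re
from typing import Optional, Tuple

# Relative age inside a label such as "... 12,345 views 2 days ago ...".
# Assumes English-language labels, as the regexes in YoutubeChannel do.
AGE_PATTERN = re.compile(r"views (.+?) ago")


def parse_label(label: str) -> Optional[Tuple[str, str]]:
    """Split a video label into (title, age), or return None if it doesn't fit.

    Hypothetical helper: assumes labels look like
    "<title> by <channel> <N> views <age> ago ...".
    """
    parts = label.rsplit(" by ", 1)  # split at the LAST " by " so titles may contain "by"
    if len(parts) != 2:
        return None  # no " by " separator: unexpected format
    title, rest = parts
    match = AGE_PATTERN.search(rest)
    if match is None:
        return None  # e.g. a scheduled premiere has no "... ago" age yet
    return title, match.group(1)
```

Returning None lets the caller skip such entries instead of crashing the whole channel check.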

Comparing time strings using the Llama 3.1 8B model

Next, we want to filter the videos based on when they were uploaded. YouTube presents this information as relative times such as “1 hour ago,” “2 days ago,” “9 days ago,” “4 weeks ago,” or “3 months ago.” If we check for new videos weekly, we should only keep those released in the past week, so among these examples only the videos uploaded “1 hour ago” and “2 days ago” should be kept. We need a piece of code that checks whether a time expressed in various units falls within the timeframe given to the application. Although this can easily be done with a Python library, by converting the time representations into the same unit and comparing the values, I wanted to see how an LLM could “simplify” it.

By “simplified,” I don’t mean using fewer CPU cycles to get the answer, but reducing the burden on the human programmer. It is simpler to just ask the LLM whether the duration “9 days” is shorter than “1 week” than to find a suitable library and learn how to use it. Admittedly, the latter is not difficult in this example, and with AI-assisted programming tools like GitHub Copilot, it is becoming easier for programmers to work with libraries they are not familiar with. The point here is to explore LLMs as general solutions for programming problems where they may be a good fit.
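For comparison, here is a minimal sketch of the conventional approach, using only the standard library instead of a third-party package. It assumes English strings of the form “<number> <unit>”, optionally followed by “ago”, and approximates a month as 30 days and a year as 365 days. parse_age and is_within are hypothetical names, not part of the project.

```python
import re
from datetime import timedelta

# Seconds per unit; month and year lengths are approximations.
UNIT_SECONDS = {
    "second": 1,
    "minute": 60,
    "hour": 3600,
    "day": 86400,
    "week": 7 * 86400,
    "month": 30 * 86400,
    "year": 365 * 86400,
}

# "<number> <unit>" with an optional plural "s", e.g. "9 days" or "1 hour ago"
AGE_RE = re.compile(r"(\d+)\s+(second|minute|hour|day|week|month|year)s?\b")


def parse_age(text: str) -> timedelta:
    """Convert a string such as "2 days ago" or "1 week" into a timedelta."""
    match = AGE_RE.search(text.lower())
    if match is None:
        raise ValueError(f"Unrecognized time string: {text!r}")
    amount, unit = int(match.group(1)), match.group(2)
    return timedelta(seconds=amount * UNIT_SECONDS[unit])


def is_within(age: str, timeframe: str) -> bool:
    """True if a video's age is no longer than the timeframe."""
    return parse_age(age) <= parse_age(timeframe)
```

With a one-week timeframe, only “1 hour ago” and “2 days ago” from the examples above pass the check. A deterministic baseline like this can also be handy for spot-checking the LLM’s answers.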

My first attempts asked the LLM the question directly and expected a simple yes/no or 1/0 answer. Unfortunately, Llama 3.1 struggled with this seemingly simple task: it made mistakes and didn’t output its answers in a consistent format. This is not necessarily a failing of LLMs in general, as GPT-4 performed extremely well on the same task. It is more a limitation of smaller open-source models. I only have a single RTX 4090 in my PC, so the 8B version of Llama 3.1 is the largest one I could fit into VRAM. I could run the larger 70B model by offloading some layers to main memory, but token generation was too slow to be practical.

Still, I wanted to have some fun making it work. First, I simplified the problem: instead of asking the model to compare the times represented by two strings directly, I asked it to convert a single time string into a number of minutes. Then, I used a prompt engineering trick and asked the LLM to solve the problem step by step. It turned out that the model got the correct answers when it wrote out its reasoning step by step. The remaining issue was that the model always included its reasoning in the output, even when instructed to provide only the final number. I suspect this is inherent to how the trick works: the reasoning has to be written out for it to help. So, I created a second function that asks the model to extract the final answer from the output of the first query, which contains the entire reasoning process.
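For comparison, the extraction step can also be approximated without a second LLM call by taking the last number in the response. The sketch below does that; extract_last_number is a hypothetical helper, not part of the project, and the heuristic assumes the model ends its reasoning with the final result. An answer like “7,200 minutes (5 days)” would fool it, which is one argument for the LLM-based extraction.

```python
import re
from typing import Optional

# A number with optional thousands separators and an optional decimal part
NUMBER_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def extract_last_number(reasoning: str) -> Optional[int]:
    """Return the last number in a step-by-step answer, or None if there is none.

    Heuristic: assumes the final result is the last number the model writes.
    """
    numbers = NUMBER_RE.findall(reasoning)
    if not numbers:
        return None
    # Drop thousands separators (and any trailing comma) before parsing
    return int(float(numbers[-1].replace(",", "")))
```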

Combining these two rounds of queries, we can convert an input time string into a number of minutes. We run this conversion on both the desired timeframe (e.g., 1 week) and a video’s “age” (e.g., 5 days), and then simply compare the two integers. The Python code for converting the time strings is given below.

```python
import sys

from langchain.chains import LLMChain
from langchain.prompts import (
    ChatPromptTemplate,
    HumanMessagePromptTemplate,
    SystemMessagePromptTemplate,
)

from model_helper import ModelHelper


class TimeConverter:
    def __init__(self, model):
        self.model = model

    def to_minutes(self, text):
        # Round 1: step-by-step conversion; round 2: extract the final number
        return self.extract_result(self.convert_time(text))

    def convert_time(self, text):
        system_prompt = """Please convert the time duration mentioned in the
text into minutes. Do the calculation step by step,
and then output the final result."""
        return self.get_response(text, system_prompt)

    def extract_result(self, text):
        system_prompt = """Please extract the number in the final result and
output nothing else but that number with digits only."""
        # Remove thousands separators and whitespace, e.g. " 7,200\n" -> 7200
        return int(self.get_response(text, system_prompt).replace(",", "").strip())

    def get_response(self, message, system_prompt):
        prompt = self.get_prompt(system_prompt)
        conversation = LLMChain(llm=self.model, prompt=prompt)
        return conversation.predict(input=message)

    def get_prompt(self, system_prompt):
        # System instruction followed by the user's text
        messages = [SystemMessagePromptTemplate.from_template(system_prompt)]
        messages.append(HumanMessagePromptTemplate.from_template("{input}"))
        return ChatPromptTemplate(messages=messages)


if __name__ == "__main__":
    text = sys.argv[1]
    converter = TimeConverter(ModelHelper.get_model())
    # Show the intermediate reasoning and the extracted number
    convert_result = converter.convert_time(text)
    result_number = converter.extract_result(convert_result)
    print(convert_result)
    print(result_number)
    # Full pipeline; this queries the model again, so the result may differ
    print(converter.to_minutes(text))
```
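Once both strings are converted to minutes, filtering reduces to an integer comparison. A minimal sketch, assuming a hypothetical filter_recent helper that accepts any to_minutes callable (such as TimeConverter(...).to_minutes) and the (title, age, url) tuples produced by YoutubeChannel. It caches conversions so that repeated age strings like “1 day” do not trigger extra LLM queries.

```python
from functools import lru_cache
from typing import Callable, List, Tuple

# (title, age, url), e.g. ("Some title", "2 days", "https://www.youtube.com/watch?v=...")
VideoInfo = Tuple[str, str, str]


def filter_recent(
    videos: List[VideoInfo],
    timeframe: str,
    to_minutes: Callable[[str], int],
) -> List[VideoInfo]:
    """Keep the videos whose age in minutes is no greater than the timeframe's."""
    # Each distinct string is converted only once, since every call may hit the LLM twice
    cached = lru_cache(maxsize=None)(to_minutes)
    limit = cached(timeframe)
    return [video for video in videos if cached(video[1]) <= limit]
```

Caching matters more here than it would for a rule-based parser, because each conversion costs two LLM calls.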

Putting everything together

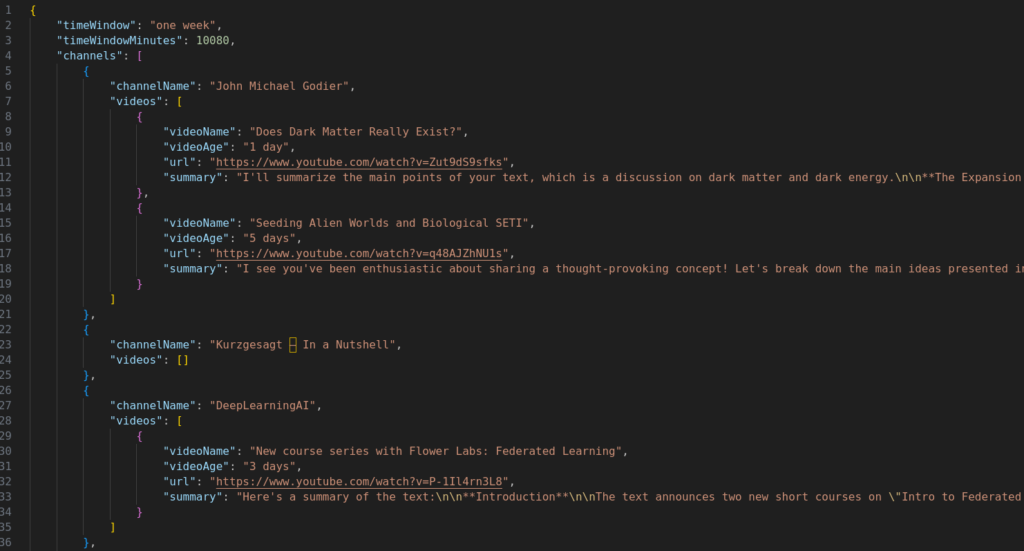

Now, let’s put everything together into the main script, which checks a list of YouTube channels for updates within a specified timeframe and generates summaries of the new videos.
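The main script itself is not reproduced in this section. As a rough sketch of how the pieces could be wired together, build_report below is a hypothetical function. It takes each channel’s (title, age, url) tuples, keeps the recent ones via a caller-supplied is_recent check (for example, one built on TimeConverter.to_minutes), and summarizes them with a caller-supplied summarize function (for example, one wrapping TranscriptProcessor.process). The JSON layout is an assumption, not the format produced by the project’s script.

```python
import json
from typing import Callable, Dict, List, Tuple

VideoInfo = Tuple[str, str, str]  # (title, age, url)


def build_report(
    channels: Dict[str, List[VideoInfo]],
    is_recent: Callable[[str], bool],
    summarize: Callable[[str], str],
) -> str:
    """Return a JSON report mapping each channel name to its recent videos.

    Channels without recent uploads map to an empty list.
    """
    report = {}
    for name, videos in channels.items():
        report[name] = [
            # summarize() runs only for videos that pass the is_recent() check
            {"title": title, "age": age, "url": url, "summary": summarize(url)}
            for title, age, url in videos
            if is_recent(age)
        ]
    return json.dumps(report, indent=2, ensure_ascii=False)
```

Passing the pieces in as callables keeps the sketch testable without network access or a running Ollama server.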

To test the code, I compiled a list of my favorite YouTube channels and set the timeframe to one week. Below are two channels from the list. At the time of testing, the first had two videos uploaded one and five days earlier, respectively, while the second had no videos uploaded within the past week.

The JSON output shown below correctly captured the two most recent videos from John Michael Godier, and no video from Kurzgesagt.

Conclusions

The code is available at YouTube_summary. In its current form, I need to launch it manually to check for new content from my favorite YouTubers. A possible improvement is to run it as a cloud service that checks for updates regularly and sends me the summaries.

Another thing to keep in mind is that content creators rely on traffic and ads to earn money. By extracting key information from YouTube videos without actually watching them, we bypass the way YouTubers make money. Although I want to save time and avoid being distracted by content pushed to me by YouTube’s algorithm, to be fair, we should support our favorite YouTubers in other ways, such as joining channel memberships, supporting them on other platforms (e.g., Patreon), or purchasing items from their shops.

(The majority of this article was drafted in July 2024)