I have deployed a basic chat service using OpenAI’s Python API. The API makes it straightforward to integrate models like GPT-3.5 (and GPT-4, once it becomes publicly accessible), but the Large Language Model (LLM) itself does not retain conversational context, because each API request is handled independently. Every interaction is therefore isolated, giving the chatbot the characteristics of an amnesiac who instantly forgets prior conversations.

Fortunately, there are existing solutions to this memory gap. One is LangChain, a Python framework for building LLM applications that can keep a record of recent conversation history. With it, the model’s responses are based not only on the user’s immediate input but also, to a certain extent, on prior interactions. Although LangChain offers many other advanced features, my primary aim at this stage is to make the chatbot’s conversations flow more naturally.

Another limitation of my initial implementation was its web interface, built with basic HTML and JavaScript libraries. Since I am primarily a Python developer, this setup quickly became a development bottleneck, particularly when adding specialized features. To address this, I am switching to Gradio, a Python library designed to simplify building web interfaces for machine learning applications, which should make adding new functionality faster.

Implementing Chat Memory with LangChain

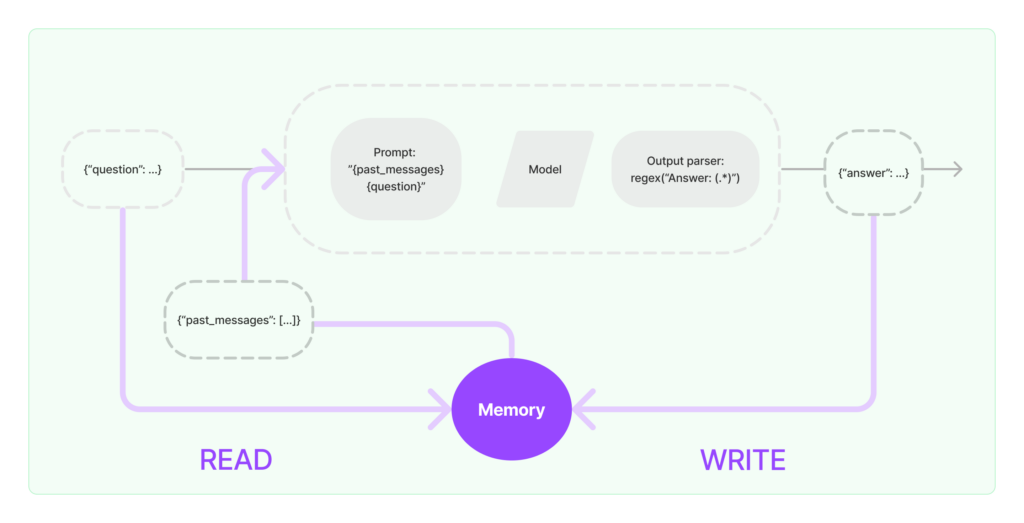

A memory system serves as a repository for prior interactions between a user and an LLM. In a typical implementation, the system combines the user’s current input with information from previous exchanges to form a new prompt. This augmented prompt is then sent to the LLM for completion. Finally, the memory system updates itself with both the user’s new input and the model’s completion. The figure above illustrates how LangChain’s memory system works.
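To make this cycle concrete, here is a minimal plain-Python sketch of a full-buffer memory loop. It is not LangChain’s implementation: `BufferMemory` and `chat_turn` are hypothetical names, the LLM is assumed to be any function mapping a prompt string to a completion, and the `Human:`/`AI:` transcript format is an assumption chosen for readability.

```python
from typing import Callable, List, Tuple

# Assumption: an "LLM" is any callable that takes a prompt string and returns a completion.
LLM = Callable[[str], str]


class BufferMemory:
    """Hypothetical memory that keeps every (user, ai) exchange."""

    def __init__(self) -> None:
        self.exchanges: List[Tuple[str, str]] = []

    def render(self) -> str:
        # Format prior exchanges as a transcript the model can read.
        return "\n".join(f"Human: {u}\nAI: {a}" for u, a in self.exchanges)

    def save(self, user_input: str, completion: str) -> None:
        self.exchanges.append((user_input, completion))


def chat_turn(llm: LLM, memory: BufferMemory, user_input: str) -> str:
    # 1. Augment the current input with the stored history.
    history = memory.render()
    current = f"Human: {user_input}\nAI:"
    prompt = f"{history}\n{current}" if history else current
    # 2. Send the augmented prompt to the LLM for completion.
    completion = llm(prompt)
    # 3. Update memory with both the new input and the completion.
    memory.save(user_input, completion)
    return completion
```

Passing a stub function as `llm` makes it easy to inspect the augmented prompt that would be sent on each turn.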

LangChain offers multiple memory configurations, which can be summarized as follows:

- ConversationBufferMemory: This configuration retains a complete buffer of the conversation. Because the entire history is sent with every prompt, it becomes increasingly resource-intensive as the conversation grows.

- ConversationBufferWindowMemory & ConversationTokenBufferMemory: These configurations limit the memory to either the most recent ‘k’ conversational exchanges or a maximum number of tokens, respectively.

- ConversationSummaryMemory: This configuration employs the LLM to generate summaries of prior interactions, which are then used to update the memory.
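The window and token variants differ mainly in how old exchanges are dropped. The sketch below illustrates the token-limited idea in plain Python. It is not LangChain’s `ConversationTokenBufferMemory` code: `trim_to_token_budget` is a hypothetical helper, and it approximates tokens by counting whitespace-separated words, whereas a real implementation would count with the model’s own tokenizer.

```python
from typing import List, Tuple

Exchange = Tuple[str, str]  # (user input, AI completion)


def approx_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def trim_to_token_budget(exchanges: List[Exchange], max_tokens: int) -> List[Exchange]:
    """Keep the most recent exchanges whose combined size fits within max_tokens."""
    kept: List[Exchange] = []
    total = 0
    # Walk backwards so the newest exchanges are kept first.
    for user, ai in reversed(exchanges):
        cost = approx_tokens(user) + approx_tokens(ai)
        if total + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append((user, ai))
        total += cost
    kept.reverse()  # restore chronological order
    return kept
```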

For my project, I opted for ConversationBufferWindowMemory with ‘k’ set to 5, so only the five most recent exchanges are kept. This setup yielded satisfactory results in my preliminary tests.
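A k-window maps naturally onto a bounded queue. As a hypothetical stand-in for `ConversationBufferWindowMemory(k=5)`, the sketch below uses Python’s `collections.deque` with `maxlen`, which drops the oldest exchange automatically. The class name `WindowMemory` is illustrative, and the OpenAI-style role/content message format is an assumption about how the history is passed to the model.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class WindowMemory:
    """Hypothetical memory that keeps only the most recent k (user, ai) exchanges."""

    def __init__(self, k: int = 5) -> None:
        # A deque with maxlen silently discards its oldest item once full.
        self.exchanges: Deque[Tuple[str, str]] = deque(maxlen=k)

    def save(self, user_input: str, completion: str) -> None:
        self.exchanges.append((user_input, completion))

    def as_messages(self) -> List[Dict[str, str]]:
        # Expand the window into OpenAI-style chat messages.
        messages: List[Dict[str, str]] = []
        for user, ai in self.exchanges:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": ai})
        return messages


memory = WindowMemory(k=5)
for i in range(7):
    memory.save(f"question {i}", f"answer {i}")
```

After seven exchanges only the last five remain, so the prompt size stays bounded however long the conversation runs.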

Simplifying User Interface Development via Gradio

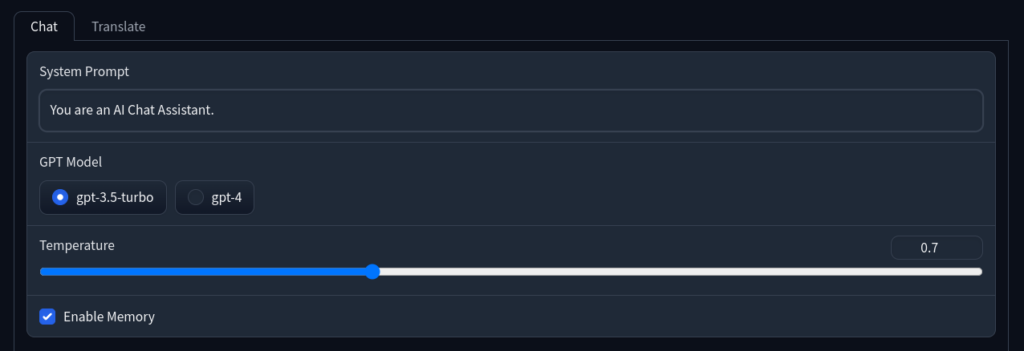

Gradio simplifies web interface development in Python, offering high-level functions for building web components that connect directly to existing Python code. Thanks to this flexibility, customizing the web interface was straightforward.

A notable omission in my previous web interface was a system prompt, which tells the LLM how to generate its responses. The system prompt was not configurable in ChatGPT until the “custom instructions” feature was introduced a few months ago. This feature lets users provide details about themselves and specify how they would like the model to respond. However, it works as a global setting, which makes switching between different sets of instructions inconvenient.

To overcome this limitation, I used Gradio to add a text box for the system prompt, defaulting to “You are an AI Chat Assistant.” I intentionally kept it short to minimize token overhead in each request to the LLM. I also added a checkbox to toggle conversation memory on or off. With memory off, previous exchanges are not resent with each request, which lowers cost, especially for self-contained tasks like those I discuss below.
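One way to combine the system-prompt text box and the memory checkbox is a single function that assembles the message list for each request. The sketch below assumes OpenAI-style chat messages and a history of (user, assistant) pairs; `build_messages` and its parameters are hypothetical names, not the code in the HomeGPT repository. In Gradio, a function like this could be called from the chat function that `gr.ChatInterface` invokes, with the text box and checkbox supplied through its `additional_inputs` parameter, though the details depend on your Gradio version.

```python
from typing import Dict, List, Sequence, Tuple

DEFAULT_SYSTEM_PROMPT = "You are an AI Chat Assistant."


def build_messages(
    user_input: str,
    history: Sequence[Tuple[str, str]],
    system_prompt: str = DEFAULT_SYSTEM_PROMPT,
    use_memory: bool = True,
    k: int = 5,
) -> List[Dict[str, str]]:
    """Assemble an OpenAI-style message list from the UI inputs (hypothetical helper)."""
    messages = [{"role": "system", "content": system_prompt}]
    # Guard k > 0: a slice of [-0:] would return the whole history.
    if use_memory and k > 0:
        # Resend only the last k exchanges as context.
        for user, ai in list(history)[-k:]:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": ai})
    messages.append({"role": "user", "content": user_input})
    return messages
```

With `use_memory=False`, each request carries only the system prompt and the current input, which is what makes the toggle cheaper for one-off tasks.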

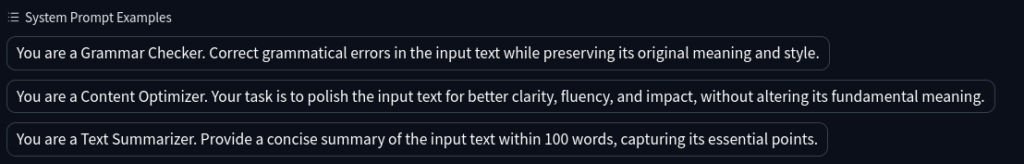

Although users can type any system prompt into the text box, I have predefined several system prompts that I use frequently:

- For grammar correction without additional changes to the user’s input: “You are a Grammar Checker. Correct grammatical errors in the input text while preserving its original meaning and style.”

- For writing improvement, which allows more substantial changes in addition to grammar fixes: “You are a Content Optimizer. Your task is to polish the input text for better clarity, fluency, and impact, without altering its fundamental meaning.”

- For content summarization: “You are a Text Summarizer. Provide a concise summary of the input text within 100 words, capturing its essential points.”

Gradio lets you list these predefined system prompts as examples, and you can switch between them with a single click. A selected example can then be edited. This is particularly useful for content summarization, where I often adjust the target length to control the level of detail in the summary.
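Storing the presets as data keeps them easy to extend, and turning the summarizer’s word limit into a parameter mirrors the length tweaking described above. This is a sketch: `PRESET_PROMPTS`, `summarizer_prompt`, and `EXAMPLES` are hypothetical names. A list of single-item lists like `EXAMPLES` is a shape Gradio’s `gr.Examples` component accepts for one input component, though the wiring in the original app may differ.

```python
def summarizer_prompt(max_words: int = 100) -> str:
    # The word limit is a parameter so the level of detail can be adjusted.
    return (
        "You are a Text Summarizer. Provide a concise summary of the input text "
        f"within {max_words} words, capturing its essential points."
    )


# Preset system prompts, quoted from the list above.
PRESET_PROMPTS = {
    "Grammar Checker": (
        "You are a Grammar Checker. Correct grammatical errors in the input text "
        "while preserving its original meaning and style."
    ),
    "Content Optimizer": (
        "You are a Content Optimizer. Your task is to polish the input text for better "
        "clarity, fluency, and impact, without altering its fundamental meaning."
    ),
    "Text Summarizer": summarizer_prompt(),
}

# One example per row, each row holding the value for a single system-prompt text box.
EXAMPLES = [[prompt] for prompt in PRESET_PROMPTS.values()]
```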

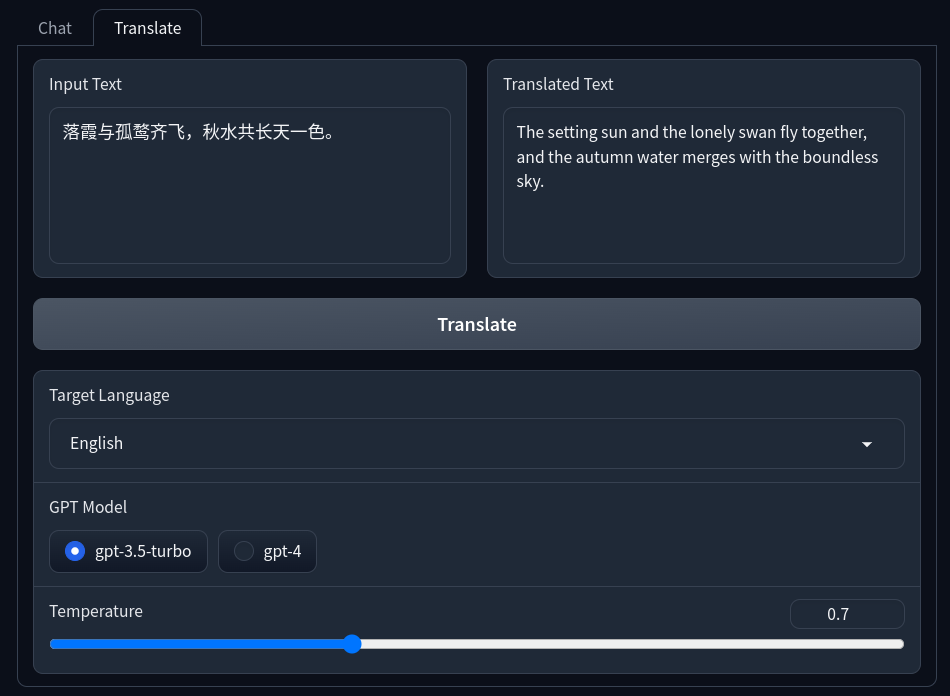

Another frequent task I assign to the LLM is language translation. For this, I wanted an interface that displays the original and translated texts side by side. Gradio makes this easy by letting me create a dedicated tab for the purpose.

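Finally, it’s worth noting that instructions placed in either the system prompt or the user input can steer the LLM toward similar outcomes. However, I prefer system prompts because they keep the instructions separate from the content, which makes the conversation neater and more organized.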

Subscription to ChatGPT Plus Cancelled

With conversational memory and a Gradio interface tailored to my routine tasks, my home-based GPT service has become a viable alternative to my ChatGPT subscription, particularly once GPT-4 is fully released to the public. Some key features are still missing, such as internet access and real-time information updates of the kind ChatGPT plugins provide, but I believe these could be added without much difficulty. Notably, Gradio apps are easy to host on Hugging Face Spaces, and LangChain provides support for ChatGPT plugins.

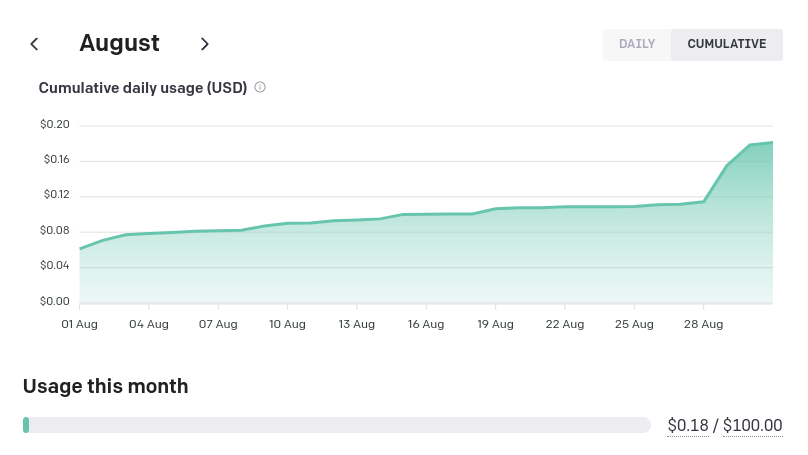

I reviewed my GPT API usage for August: it totaled only 18 cents, covering both my development tests and my wife’s use of the service. Although costs may rise once GPT-4 becomes available and I switch fully to my home-built service, I expect them to remain far below the $20 monthly fee for ChatGPT Plus.
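The comparison with the subscription fee comes down to simple arithmetic, since API usage is billed per token with separate input and output rates. The rates and token counts below are placeholders, not my actual August figures or OpenAI’s current prices; `api_cost_usd` is a hypothetical helper, and real numbers should come from your usage dashboard and OpenAI’s pricing page.

```python
SUBSCRIPTION_USD = 20.0  # ChatGPT Plus monthly fee, as stated above


def api_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Pay-as-you-go cost, with prices quoted per 1,000 tokens."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


# Hypothetical monthly usage and placeholder per-1K-token rates.
monthly_cost = api_cost_usd(
    input_tokens=2_000_000,
    output_tokens=500_000,
    input_price_per_1k=0.0015,
    output_price_per_1k=0.002,
)
cheaper_than_subscription = monthly_cost < SUBSCRIPTION_USD
```

Under these assumed numbers the bill comes to about $4 (2,000 × $0.0015 + 500 × $0.002). Conversation memory increases the input-token count, because earlier exchanges are resent with every request, which is another reason the memory toggle helps keep costs down.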

So I decided to cancel my subscription. Beyond the financial aspect, setting up, customizing, and continually refining this home-based service has been a fulfilling learning and exploratory experience for me.

The updated implementation is available at https://github.com/shulik7/HomeGPT