My wife and I are gradually getting used to ChatGPT, but I am currently the only one with a subscription. I’d like to give her access too, but with no family plan available, options are limited. Sharing an account, even if OpenAI permits it, isn’t ideal because we value the privacy of our chat histories. Yet paying for two individual subscriptions feels financially burdensome.

A promising solution lies in OpenAI’s GPT API service, which offers an alternative way to access GPT models. The same API account can serve multiple users and, importantly, it follows a pay-as-you-go model. Costs are incurred only with usage, which makes it a good fit for my wife’s current requirements.

Exploring a Simplified Approach Using OpenAI’s Official API

Once the OpenAI Python library is installed, submitting a prompt to the API and receiving a response is quite straightforward, as demonstrated below.

import os

import openai

from dotenv import load_dotenv, find_dotenv

# read env variables from the local .env file

_ = load_dotenv(find_dotenv())

openai.api_key = os.getenv('OPENAI_API_KEY')

def get_chat_response(prompt, model="gpt-3.5-turbo", temperature=1):

messages = [{"role": "user", "content": prompt}]

response = openai.ChatCompletion.create(

model=model, messages=messages, temperature=temperature

)

return response

response = get_chat_response("Which version of the GPT model do you utilize?")

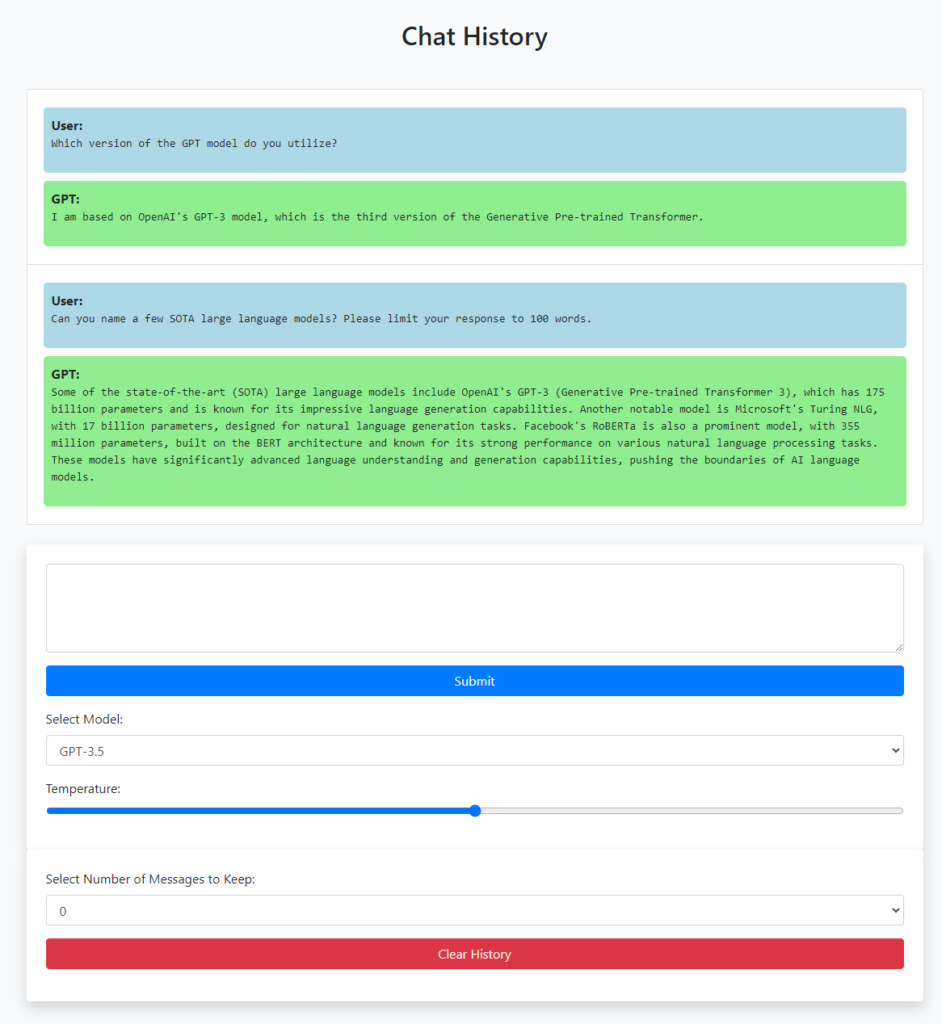

print(response.choices[0].message["content"])The subsequent phase involves constructing a web service to facilitate the submission of prompts and the retrieval of completions through a web browser interface. Although my past experience with Flask is limited to a toy project undertaken years ago, leaving me with virtually no practical knowledge, this did not pose a significant obstacle. With the assistance of ChatGPT, I managed to develop a basic Flask service through several iterations. This service maintains a chat history and allows users to erase specific entries or clear the entire history as needed.

```python
from flask import redirect, render_template, request, session, url_for

# `app` (the Flask application) and try_get_chat_response() are defined
# elsewhere in the project.


@app.route("/chat", methods=["GET", "POST"])
def form_post():
    # The chat history lives in the user's session
    if "history" not in session:
        session["history"] = []

    if request.method == "POST":
        prompt = request.form["text"]
        # Fall back to the same defaults as the GET branch when a field is missing
        model = request.form.get("model", "gpt-3.5-turbo")
        temperature = float(request.form.get("temperature", 1))

        response, error_message = try_get_chat_response(prompt, model, temperature)
        # Show the error message in place of the completion when the call fails
        output = (
            error_message
            if response is None
            else response.choices[0].message["content"]
        )

        session["history"].append({"User": prompt, "GPT": output})
        session["model"] = model
        session["temperature"] = temperature
        # The history list was mutated in place, so flag the session as changed
        session.modified = True

        return render_template(
            "home.html",
            history=session["history"],
            model=session["model"],
            temperature=session["temperature"],
        )
    else:
        return render_template(
            "home.html",
            history=session["history"],
            model=session.get("model", "gpt-3.5-turbo"),
            temperature=session.get("temperature", 1),
        )


@app.route("/clear", methods=["POST"])
def clear_history():
    keep = int(request.form.get("keep", 0))
    if keep == 0:
        session["history"] = []
    else:
        # Keep only the last 'keep' messages
        session["history"] = session.get("history", [])[-keep:]
    session.modified = True
    # Redirect back to the chat
    return redirect(url_for("form_post"))
```

We also need a web interface to take input from the user (e.g., my wife or myself) and display the response from the GPT model. Starting with a basic web page, I gradually refined it with ChatGPT’s guidance. Given my lack of prior experience embedding JavaScript in HTML, I am quite content with the final outcome. Since I set up the service strictly for home use, I did not have to devote much attention to aspects such as load balancing or network security.
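The `/chat` route calls `try_get_chat_response`, whose body is not shown in this article. Judging only from how the route uses it, it returns a `(response, error_message)` pair. The following is a minimal sketch of what such a wrapper might look like, not the project's actual code. The `fetch` parameter is a hypothetical addition so the example runs on its own; in the service it would presumably be `get_chat_response`.

```python
from typing import Any, Callable, Optional, Tuple


def try_get_chat_response(
    prompt: str,
    model: str,
    temperature: float,
    fetch: Callable[[str, str, float], Any],  # hypothetical: e.g. get_chat_response
) -> Tuple[Optional[Any], Optional[str]]:
    """Return (response, None) on success or (None, error_message) on failure."""
    try:
        return fetch(prompt, model, temperature), None
    except Exception as exc:
        # Deliberately broad: any failure (network, authentication, unknown
        # model) becomes a message the page can display instead of a crash.
        return None, f"Request failed: {type(exc).__name__}: {exc}"
```

Returning the error as text lets the route store it in the chat history like any other output, which matches how `output` is built in the route.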

Recognizing the Limitations of the Current Implementation

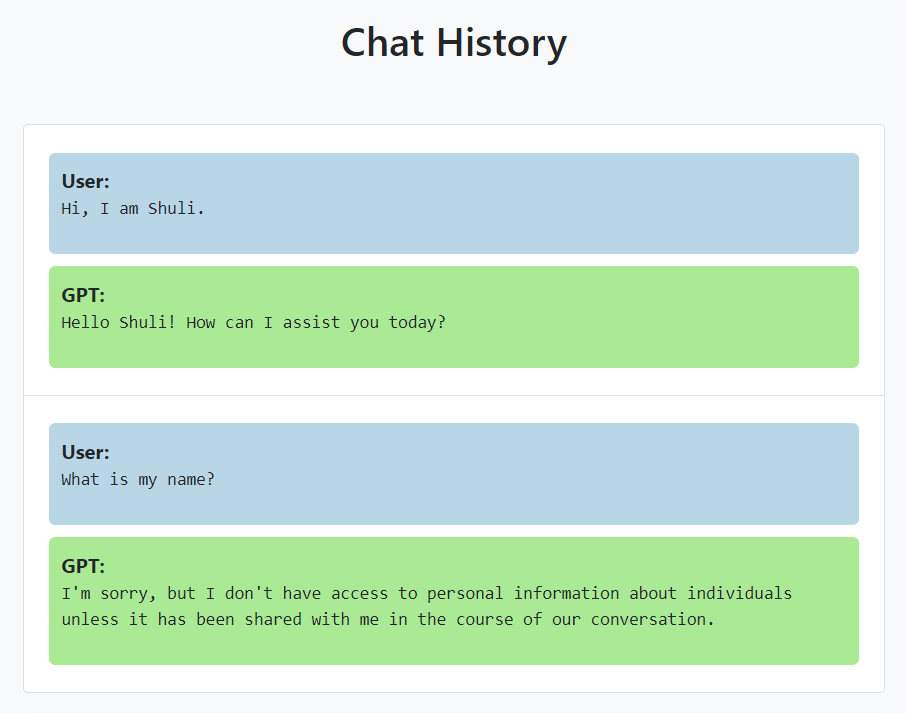

Regrettably, the aforementioned creation falls short of being a true chatbot akin to the ChatGPT web service, as it lacks the ability to retain information from previous interactions.

This limitation stems from the statelessness of large language models such as OpenAI’s GPT-3 and GPT-4. Each request is processed independently, with no memory of prior requests, so the caller has to resend earlier messages to maintain context. Fortunately, widely used libraries for language model development address this common requirement, so adding it is on my to-do list.
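Even without an extra library, context can be kept by resending earlier turns with each request. The sketch below is one possible approach rather than what the service currently does. It converts the session history (the `{"User": ..., "GPT": ...}` entries stored by the `/chat` route) into the `messages` list that the chat API expects. The function name `build_messages` and the `max_turns` parameter are my own illustrative choices.

```python
from typing import Dict, List


def build_messages(
    history: List[Dict[str, str]], prompt: str, max_turns: int = 5
) -> List[Dict[str, str]]:
    """Turn stored chat turns plus the new prompt into a chat `messages` list."""
    # history[-0:] would return the whole list, so handle zero explicitly
    recent = history[-max_turns:] if max_turns > 0 else []
    messages = []
    for turn in recent:
        messages.append({"role": "user", "content": turn["User"]})
        messages.append({"role": "assistant", "content": turn["GPT"]})
    # The new prompt always goes last
    messages.append({"role": "user", "content": prompt})
    return messages
```

The resulting list would replace the single-message list built in `get_chat_response`. Capping the number of turns matters because every resent message counts toward the tokens billed and toward the model's context limit. Note that the history also stores error messages in the `"GPT"` field, so a real implementation would probably want to filter those out.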

Aside from its inability to remember conversation context, this simple web service lacks a sophisticated interface, and it doesn’t offer the advanced features currently provided by OpenAI’s ChatGPT Plus subscription, such as plugins and custom instructions. Nevertheless, it adequately fulfills many of our requirements, including proofreading and enhancing written material, summarizing lengthy text passages, generating draft content as per specific requests, seeking knowledge, and more. I appreciate the pay-as-you-go structure of the API-based usage, which proved to be economical, costing me less than 10 cents during the development phase of this service. Moreover, there is flexibility to augment the service with additional functionalities, including conversation memory, pre-defined tasks with tailored instructions, and the possibility of eventually hosting it on the internet.
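To keep an eye on the pay-as-you-go cost, the token counts reported in each response's `usage` field can be turned into a rough estimate. This is only a sketch: the function name `estimate_cost` is hypothetical, and the per-1,000-token prices are parameters that must be filled in from OpenAI's current pricing page. The values in the usage example are placeholders, not real prices.

```python
from typing import Mapping


def estimate_cost(
    usage: Mapping[str, int],
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate the cost of one request from its token usage."""
    prompt_cost = usage["prompt_tokens"] / 1000 * input_price_per_1k
    completion_cost = usage["completion_tokens"] / 1000 * output_price_per_1k
    return prompt_cost + completion_cost


# Placeholder numbers for illustration only
example_usage = {"prompt_tokens": 1200, "completion_tokens": 300, "total_tokens": 1500}
print(f"${estimate_cost(example_usage, 0.001, 0.002):.4f}")
```

In the service, `usage` would come from the API response (for example `response["usage"]`), and summing the estimates over the session would give a running total.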

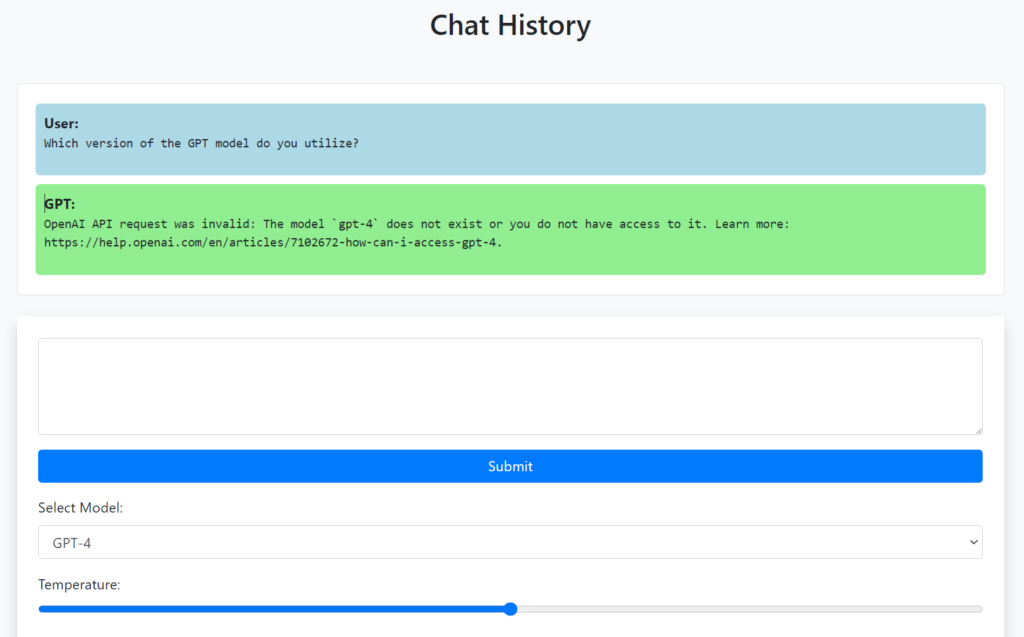

Yet another aspect I’m currently missing is the access to the GPT-4 model, which I often utilize for tasks beyond elementary functions such as grammar checking and correction. If we specify the “gpt-4” model in our request, the following API error message is returned. Thankfully, OpenAI plans to grant access to all developers in the near future, as noted on the web page linked in the error message.

I intend to maintain my $20 monthly subscription while continuing to enhance this straightforward service. Perhaps, in time, I may joyfully cancel the subscription and depend solely on this homemade application. Not only would this decision save me some money, but more significantly, the process of developing and experimenting with this “AI application” has been immensely enjoyable—especially if we choose to use such a trendy term!

The code for this project can be accessed at https://github.com/shulik7/HomeGPT/tree/implement/openai_api.
